Lab Tours

Infant Cognitive Development Lab

Hosts: Jena Blegen, Rebecca Woods

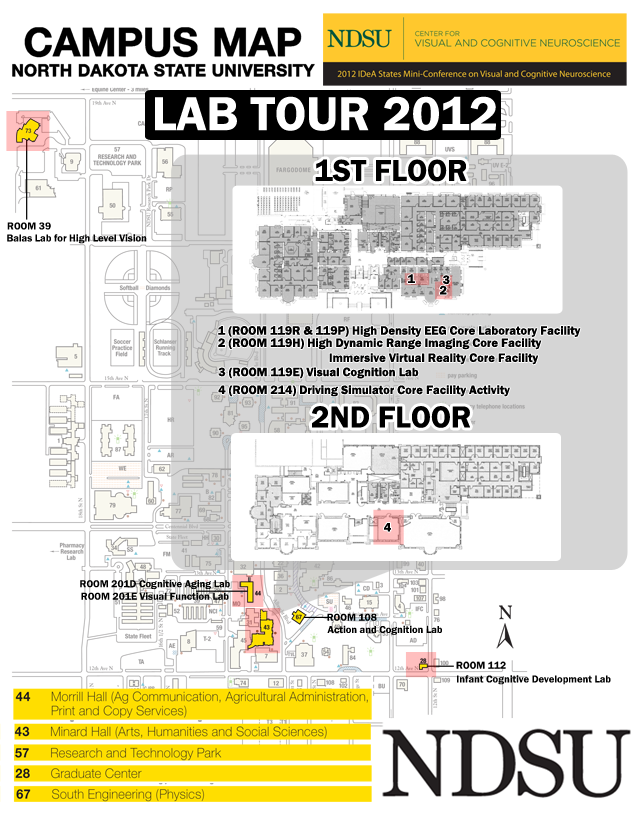

Location: Graduate Center 112

The lab tests learning, attention, and memory in 3- to 24-month-old infants using look time, object exploration, and search behavior. The facility includes three lab rooms equipped with live- and video-coding software.

Balas Lab for High-Level Vision

Host: Ben Balas

Location: Technology Incubator Room 39

The Balas Lab for High-Level Vision is equipped to carry out behavioral and electrophysiological studies of face, object, and texture perception. The centerpiece of the lab is an Electrical Geodesics 64-channel EEG system that we use for a wide range of ERP tasks in adults and 3- to 6-year-old children. The lab is equipped to measure traditional ERPs, perform source localization on EEG data, and implement time-frequency and single-trial classification techniques. The Balas Lab also houses a NaturalPoint facial motion capture system, which can be used to capture natural facial gestures in real time. Come for a visit and meet our mascot, Norman P. Bear, who plays a key role in welcoming children (and their parents) to the lab!

Action and Cognition Lab

Host: Laura Thomas

Location: South Engineering 108

Research in the Action and Cognition Lab incorporates approaches from both vision science and embodied cognition to study the ways in which physical actions affect key components of cognition such as problem solving, memory, and attention.

Visual Cognition Lab

Host: Wendy Troop-Gordon

Location: Minard 119E

In the Visual Cognition Lab, we focus on questions about the mental representation of visual information. In particular, we study what people represent about complex scenes and objects, and the role of attention in the development of those representations. We investigate these issues using a number of techniques, including psychophysics, eye tracking, driving simulation, and EEG. The lab tour will feature a demonstration of how our lab, in collaboration with Wendy Troop-Gordon, is using eye tracking to examine attentional biases among youth exhibiting high and low levels of social and mental health difficulties.

Visual Function Laboratory

Hosts: Kyle Sundberg, Marcus Mahar, Zach Leonard, Mik Ratzlaff, Mark Nawrot

Location: Morrill 201

The Visual Function Laboratory uses psychophysical methods to study the neural mechanisms for the visual perception of depth. Demonstrations will include: motion parallax displays with head movements, paradigms comparing motion parallax with binocular stereopsis and with perspective information, ocular-following eye movements in response to radial optic flow on an E-lumens screen, and depth perception with night vision goggles.

Cognitive Aging Lab

Hosts: Linda Langley, Nora Gayzur, Alyson Saville, Hannah Ritteman, Marcus Mahar

Location: Morrill 201D

The Cognitive Aging Lab, directed by Linda Langley, is dedicated to the study of cognitive functioning in later life. We focus on age-related changes in attention and visual search. Current questions of interest are: How do age-related changes in the brain influence older adults' attention? What types of cognitive training programs benefit older adults? How does attention impact everyday abilities such as driving? How does meaningfulness impact how young and older adults attend to information?

High Density EEG Core Laboratory Facility

Host: Tom Campbell

Location: Minard 119R, Minard 119P

CVCN core facilities include Polhemus Patriot digitization equipment for recording electrode positions, alongside two electrically and acoustically shielded EEG laboratories with 168-channel BioSemi ActiveTwo systems for EEG/EOG recordings. One lab is equipped for laser stimulation of peri-hand and extra-personal space, whilst EEG is recorded to determine how the brain differentially processes visual stimulation proximal and distal to the hand. The second laboratory is equipped for the investigation of the neural basis of the translational Vestibulo-Ocular Reflex (tVOR) and Smooth Pursuit Eye Movements (SPEM), when the participant is stationary or translated in the inter-aural plane. Kinematic information is recorded alongside EOG and EEG from a new design of 160-channel electrode array that covers the scalp and neck. Kinematic information is then used in a process intended to reduce motion (piezoelectric cable sway, mechanical electrode movement), electromyographic (posture-corrective neck muscle), and electro-ocular (eye-movement) artefact. Analysis of EEG source generators is then used to investigate the neural basis of tVOR and SPEM.

High Dynamic Range Imaging Core Facility

Hosts: Mark McCourt, Barbara Blakeslee, Ganesh Padmanabhan

Location: Minard 119H

The CVCN recently acquired a second-generation high dynamic range display, the HDR47E (Sim2 Multimedia, S.p.A.). This 47-inch LCD display, with 2,202 high-power LED backlight elements, has a peak luminance in excess of 4,000 cd/m² and a resolution of 1920 x 1080 pixels. This display allows researchers to test human vision under fully photopic (real world) conditions, and will be used in studies of brightness/lightness perception (with Barbara Blakeslee), as well as in studies assessing the potential of HDR technology as a low vision aid in clinical populations, such as patients with age-related maculopathy (a collaboration with a local ophthalmologist, Max Johnson, M.D., Retina Associates).

Immersive Virtual Reality Core Facility

Hosts: Ganesh Padmanabhan, Mark McCourt

Location: Minard 119H

The CVCN immersive virtual reality core facility is based on a new head-mounted display, the NVIS nVisor SX111, which has replaced/supplemented the previous NVIS nVisor SX60. The SX111 is brighter, has a higher contrast ratio, and, most importantly, has a much larger field of view (102° H x 64° V). One study under development concerns visuohaptic multisensory integration. We have ordered a custom-built programmable 10-channel vibrotactile stimulator (Psyal, Ltd) and an 18-sensor dataglove (CyberGlove II). Thresholds and/or reaction time measures can be obtained for visual and haptic stimuli located in space relative to an avatar hand whose position and orientation are tracked in real time. Examples of stimuli using the avatar hand will be presented, as well as examples of scenarios used recently to conduct a task-switching study showing that switch costs can be greatly reduced if environmental switch cues are augmented in VR.

Driving Simulator Core Facility

Hosts: Robert Gordon, Enrique Alvarez

Location: Minard 214

The NDSU CVCN Driving Simulation Core facility is based on a DriveSafety DS-600c driving simulator. CVCN faculty and students are conducting several studies that use this facility. One study concerns the driving behavior of older, middle-aged, and young drivers on simulated rural roadways. This project was partially funded by the Federal Highway Administration, and represents a collaborative effort between the CVCN and the NDSU Upper Great Plains Transportation Institute. A second project is a collaboration between the NDSU CVCN and researchers from the Fargo Neuropsychiatric Research Institute. A third project is being conducted by Drs. Linda Langley and Robert Gordon under the auspices of an ARRA Administrative Supplement to the parent COBRE, in which driving behavior is being assayed as an outcome measure in a study to test the efficacy of video game training as a tool for cognitive rehabilitation in older adults.